(Special thanks to Denny Lee for reviewing this)

Apache Hadoop is an open source project composed of several software solutions for distributed computing. One interesting component is Apache Hive, which lets one leverage the MapReduce capabilities of Hadoop through the simple interface of the HiveQL language. This language is basically a SQL lookalike that triggers MapReduce jobs for operations that are highly distributable over huge datasets.

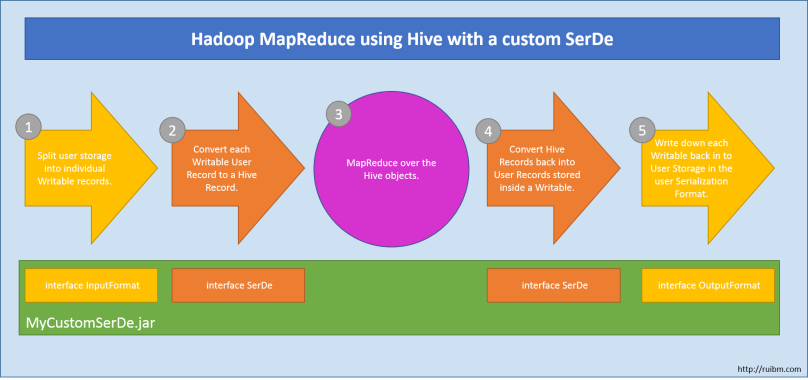

A common hurdle once a company decides to use Hadoop and Hive is: “How do we make Hadoop understand our data formats?” This is where the Hadoop SerDe terminology kicks in. SerDe is simply short for Serialization/Deserialization. Hadoop exposes quite a few Java interfaces in its API that allow users to write their own data format readers and writers.

Step by step, one can make Hadoop and Hive understand new data formats by:

1) Writing format readers and writers in Java that call Hadoop APIs.

2) Packaging all code in a Java library, e.g., MySerDe.jar.

3) Adding the jar to the Hadoop installation and configuration files.

4) Creating Hive tables and explicitly setting the input format, the output format and the row format.
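To make steps 3 and 4 a bit more concrete, here is a minimal sketch of the HiveQL statements involved, built as plain Java strings so they can be printed or handed to whatever client you use. Everything named here is a placeholder I made up for illustration: the jar path, the table name `my_rows`, its columns, and the `com.example.*` class names. Note also that `ADD JAR` only registers the jar for the current Hive session, which is an alternative to installing it permanently as step 3 describes.

```java
// Sketch only: all paths, table names, columns and class names below are
// hypothetical placeholders, not part of any real library.
final class HiveDdlSketch {

    /** Statement that registers the packaged SerDe jar for the current session. */
    static String addJar(String jarPath) {
        return "ADD JAR " + jarPath + ";";
    }

    /** CREATE TABLE statement that names the row format and both file formats. */
    static String createTable(String table, String columns,
                              String serDeClass,
                              String inputFormatClass,
                              String outputFormatClass) {
        return "CREATE TABLE " + table + " (" + columns + ")\n"
             + "ROW FORMAT SERDE '" + serDeClass + "'\n"
             + "STORED AS INPUTFORMAT '" + inputFormatClass + "'\n"
             + "OUTPUTFORMAT '" + outputFormatClass + "';";
    }

    public static void main(String[] args) {
        System.out.println(addJar("/path/to/MySerDe.jar"));
        System.out.println(createTable(
            "my_rows", "id INT, description STRING",
            "com.example.MySerDe",
            "com.example.MyInputFormat",
            "com.example.MyOutputFormat"));
    }
}
```

The point is simply that the table definition is where Hive learns which classes to use: one for the row format and one each for reading and writing the underlying files.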

Before diving into the Hadoop API and Java code, it’s important to explain what really needs to be implemented. For conciseness, I shall refer to a row as the individual unit of information that will be processed. In the case of good old SQL databases this indeed maps to a table row. However, our data source can be something as simple as Apache logs. In that case, a row would be a single log line. Other storage types might use complex message formats like Protobuf messages, Thrift structs, etc. For any of these, think of the top-level struct as our row. What they all have in common is that inside each row there will be sub-fields (columns), and those will have specific types like integer, string, double, map, and so on.

So going back to our SerDe implementation, the first thing that will be required is the row deserializer/serializer (RowSerDe). This Java class will be in charge of mapping our row structure into Hive’s row structure. Let’s say each of our rows corresponds to a Java class (ExampleCustomRow) with three fields:

- int id;

- String description;

- byte[] payload;

The RowSerDe should be able to mirror this row class and its properties into Hive’s ObjectInspector interface. For each of our types it’ll find and return the equivalent type in the Hive API. Here’s the output of our RowSerDe for this example:

- int id -> JavaIntObjectInspector

- String description -> JavaStringObjectInspector

- byte[] payload -> JavaBinaryObjectInspector

- class ExampleCustomRow -> StructObjectInspector

In the example above, the row structure is very flat, but for cases where our class contains other classes and so forth, the RowSerDe needs to be able to recursively reflect the whole structure into Hive API objects.
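The recursive mapping can be sketched with plain Java reflection. To keep the example free of Hive dependencies, this sketch returns the names of the inspector types as strings instead of real ObjectInspector instances, which is what an actual SerDe would have to produce. The `Envelope` class and the `describe` helper are hypothetical names I am introducing for illustration only.

```java
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;
import java.util.ArrayList;
import java.util.List;

// Sketch only: maps Java types to the *names* of Hive inspector types.
// A real SerDe would return ObjectInspector instances instead of strings.
final class InspectorMappingSketch {

    /** The row class from the post. */
    static class ExampleCustomRow {
        int id;
        String description;
        byte[] payload;
    }

    /** Hypothetical nested row, to show the recursion. */
    static class Envelope {
        int version;
        ExampleCustomRow row;
    }

    /** Recursively describes a type; unknown classes are treated as structs. */
    static String describe(Class<?> type) {
        if (type == int.class || type == Integer.class) return "JavaIntObjectInspector";
        if (type == String.class) return "JavaStringObjectInspector";
        if (type == byte[].class) return "JavaBinaryObjectInspector";
        if (type.isPrimitive() || type.isArray()) {
            throw new IllegalArgumentException("type not handled in this sketch: " + type);
        }
        // Any other class is reflected field by field.
        // Note: a self-referencing class would recurse forever here.
        List<String> fields = new ArrayList<>();
        for (Field f : type.getDeclaredFields()) {
            if (Modifier.isStatic(f.getModifiers()) || f.isSynthetic()) continue;
            fields.add(f.getName() + ":" + describe(f.getType()));
        }
        return "StructObjectInspector<" + String.join(", ", fields) + ">";
    }

    public static void main(String[] args) {
        System.out.println(describe(ExampleCustomRow.class));
        System.out.println(describe(Envelope.class));
    }
}
```

For `Envelope`, the `row` field is itself described as a struct, which is the recursion the RowSerDe needs for nested classes.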

Once we have a way of mapping our rows into Hadoop rows, we need to provide a way for Hadoop to read our files or databases that contain multiple rows and extract them one by one. This is done via the Input and Output format APIs. A simple format for storing multiple rows in a file would be separating them with newline characters (as comma-separated files do). An input reader in this case would need to know how to read a byte stream and single out the byte arrays of individual lines, which would later be fed into our custom SerDe class.
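The core of such a reader can be sketched without any Hadoop classes. The `readRows` helper below is a hypothetical name, and it reads the whole stream into memory for simplicity. A real Hadoop reader would hand out one row at a time and also has to deal with files being divided among several workers, which this sketch ignores.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Sketch only: plain Java, not the Hadoop InputFormat API.
final class LineRowReaderSketch {

    /** Splits a byte stream into one byte array per newline-separated row. */
    static List<byte[]> readRows(InputStream in) {
        List<byte[]> rows = new ArrayList<>();
        ByteArrayOutputStream current = new ByteArrayOutputStream();
        try {
            int b;
            while ((b = in.read()) != -1) {
                if (b == '\n') {
                    // End of a row: emit the bytes collected so far.
                    rows.add(current.toByteArray());
                    current.reset();
                } else {
                    current.write(b);
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        // Keep a final row that has no trailing newline.
        if (current.size() > 0) {
            rows.add(current.toByteArray());
        }
        return rows;
    }

    public static void main(String[] args) {
        byte[] file = "1,first\n2,second\n".getBytes(StandardCharsets.UTF_8);
        for (byte[] row : readRows(new ByteArrayInputStream(file))) {
            System.out.println(new String(row, StandardCharsets.UTF_8));
        }
    }
}
```

One caveat of the newline format: a row whose bytes contain a newline (a binary payload, for instance) would be split in two, so such data would need escaping or a different framing.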

As you can probably imagine by now, the output writer needs to do exactly the opposite: it receives the bytes that correspond to each line and knows how to append them to the output byte stream, separated by newline characters.
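A minimal sketch of that writing side, again in plain Java rather than the Hadoop OutputFormat API. The class name `LineRowWriterSketch` and its `writeRow` method are hypothetical, and the row bytes are assumed to contain no newline characters of their own.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

// Sketch only: plain Java, not the Hadoop OutputFormat API.
final class LineRowWriterSketch {

    private final OutputStream out;

    LineRowWriterSketch(OutputStream out) {
        this.out = out;
    }

    /** Appends one serialized row followed by the newline separator. */
    void writeRow(byte[] row) {
        try {
            out.write(row);
            out.write('\n');
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        ByteArrayOutputStream file = new ByteArrayOutputStream();
        LineRowWriterSketch writer = new LineRowWriterSketch(file);
        writer.writeRow("1,first".getBytes(StandardCharsets.UTF_8));
        writer.writeRow("2,second".getBytes(StandardCharsets.UTF_8));
        System.out.print(new String(file.toByteArray(), StandardCharsets.UTF_8));
    }
}
```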

Summarizing, in order to implement a complete SerDe one needs to implement:

1) The Hive SerDe interface (which contains both the Serializer and Deserializer interfaces).

2) The InputFormat interface and the OutputFormat interface.

In the next post I’ll take a deep dive into the actual Hadoop/Hive APIs and Java code.

(Two years have gone by and I unfortunately never got round to writing anything else. Anything I would write now would probably be outdated, so I would encourage anyone who has questions to ping me directly or just ask the Hive community directly.)